The New Machine: from Logic to Organization

Teresa Numerico

This paper has a twofold aim. On the one hand, I wish to illustrate a new perspective on logic that Alan Turing discussed in public and private communications after the results he had obtained in the 1930s. In particular, I shall underline his controversial relationship with the strictly Hilbertian approach to mathematical logic. On the other hand, I shall show an often forgotten vision of machine intelligence proposed by Turing himself in a report on Intelligent Machinery written in 1948, where he was more explicit than usual about his experiments concerning machine intelligence, and described a stimulating idea about unorganized machines and their potential intelligent behaviours. An analysis of the report allows us to perceive the dilemma relating to machine intelligence that is at the centre of Turing’s reflections: the machine as the ‘queen’ of discipline versus the machine with some kind of initiative, which accepts the risk of making mistakes. The concept of organization at the core of the definition of unorganized, self-modifying machines developed in this paper is only loosely connected with the logic that underpinned the computability results of the 1930s. However, it is closely linked with various modern ideas such as interaction, just-in-time processes, order, disorder and communication. The Hilbertian programme on logic did not develop any of these concepts, yet they are included in the suite of essential tools used by computer science.

I shall also show the connections and discrepancies between Turing’s new approach to machine intelligence and other similar contemporary positions such as the cybernetics vision of Norbert Wiener and John von Neumann’s project for building self-reproducing automata. I shall argue that there was a second ‘confluence of ideas’ in the late 1940s related to the importance of critical concepts such as order, disorder, random processes, error-checking, organization and self-modifying devices for the design of the first generation of intelligent machinery.

The confluence of ideas on machines and logic in the late 1940s

My hypothesis in this paper is that around 1948 there occurred a clear second confluence of ideas about the best way of building machines to behave in a way that could be considered not mechanical, a new type of machine which would display some characteristics more commonly found in human beings than in mechanical devices. It is likely that this confluence was not the output of any explicit reciprocal influence of the authors of the crucial works, but it was ‘in the air’, although they did have some contact with each other. Alan Turing, John von Neumann and Norbert Wiener were the first to understand and discuss this new insight, although they maintained many differences in the deployment of the future machine of their dreams. The common characteristics of this perspective were the critique of traditional logic as the only theoretical tool to guide the project and the plan for a new kind of machine. We shall see the different perspectives adopted by these scientists in the following sections, but I would anticipate that the major differences depended on the aims of the machines. Turing wanted to create an intelligent machine, capable of accomplishing tasks considered appropriate to human beings only; Wiener was interested in machines able to communicate effectively with other machines, human beings or the outer world in general; von Neumann was very fond of the idea of creating a self-reproducing automaton—a device that would self-replicate, maintaining its level of complexity or even increasing it with each new generation.

The works in which they set out their ideas were all written or presented in 1948. Turing wrote ‘Intelligent Machinery’, the report on his achievements during his sabbatical year at Cambridge (Turing 1948), which was published for the first time only 20 years later. Here he openly discussed his ideas about a new type of intelligent machinery that was not limited to acting according to its logic alone, but was capable of self-organization, learning and individual initiative. In a subsequent paper on the same subject (Turing 1950), published in the prestigious journal Mind, he was much more cautious than in the earlier report, having to justify every sentence in line with scientific best practice, whereas previously he had based his investigations only on hints and insights and had no proof to demonstrate the correctness of his vision.

In September 1948, von Neumann gave a talk on the ‘General and Logical Theory of Automata’ at the Hixon Symposium on Cerebral mechanisms in behaviour. This event was the starting point of his new research on automata, their characteristics and their potential that was to be a central part of his interest until his death in 1957. His project was the construction of a self-reproducing artificial automaton, capable of regenerating an automaton as complex as itself or even more so, as happens in biological reproduction. In order to achieve his goal, von Neumann had to elaborate a new logic, a tool capable of taking into account the complexity of such automata, and the imprecise measurement of reproduction, tolerating errors, without blocking the entire process.

In the same year, Norbert Wiener published his famous and seminal book Cybernetics: or Control and Communication in the Animal and the Machine (Wiener 1948), in which he outlined a complete vision of this new science of communication and feedback between human beings and machines. He based his approach on the capacity for interaction of devices built according to models of neurological organs and capable of reacting effectively to the stimuli of the environment. Here, then, logic could only be a partial tool, useful only in those situations where it was possible to describe and measure precisely all the variables involved in the process.

The role of intuition and ingenuity in logic according to Turing

Turing’s challenge to the Hilbertian Programme on logic stretched back to the years he had spent at Princeton in the US working on his Ph.D. thesis under the supervision of Alonzo Church (1936–1938). In a section dedicated to the purpose of his paper on ordinal logics (Turing 1939), he declared his position on the role of intuition and ingenuity in logic, affirming that it was not possible to find ‘a formal logic which wholly eliminates the necessity of using intuition’ (Turing 1939/2004: 193). The definition of the ‘activity of intuition’ was not very clear, being loosely described as the capacity of ‘making spontaneous judgements which are not the result of conscious trains of reasoning’ (ibid.: 192). The role of ingenuity was connected with intuition, because this faculty consists, according to Turing, ‘in aiding intuition through suitable arrangement of propositions, and perhaps geometrical figures or drawing’ (ibid.).

Ingenuity was a support for intuition, since with its heuristic methods it increases the validity of intuitive judgement so much that it can no longer be doubted. It is possible to affirm that, starting from this paper, Turing changed his vision of the actual work within formal logic. From his perspective, intuition places a sort of bet on a guess about a conclusion, and ingenuity provides the support that renders the bet plausible and believable. Not all conclusions of logic could be proved using only mechanical steps (steps needing neither intuition nor ingenuity); some were necessarily intuitive and impossible to demonstrate by other means. If the mathematician could keep those steps down to a minimum and follow some basic rules in building the proof, then the results would be correct ‘whenever the intuitive steps are correct’ (ibid.: 193).

The results of the 1939 paper can be clarified and supplemented by a couple of letters written in 1940 to Max Newman, his professor at Cambridge, in which Turing shed more light on the major consequences of his American work, with special regard to his attitude to the logic of foundations. The details of his original view (see Copeland 2004: 205-206) can be found only in this correspondence (published for the first time in Copeland 2004: 211-216), and are an important starting point for the correct interpretation of the approach to logic adopted by Turing in his research on intelligent machinery during the 1940s. Among the various ideas put to Newman, and through him to us, there are a few points that deserve further comment because of their peculiarity.

The first idea is contained in the first letter (written on 21 April 1940, Copeland 2004: 211-213) and relates to the desire not to make ‘proofs by hunting through enumerations for them, but by hitting on one and then checking up to see that it is right’. Here Turing showed that his attention was closer to the practice of mathematics than to the theoretical aspect of logic. His approach concentrated on the discovery of new proofs and not on preserving the formalism of demonstrations within the bounds of the parameters set by Hilbert’s view of formal logic. If we accept the idea of ‘hitting on a proof’, we have to admit, as he suggests, ‘not one but many methods of checking up’. The emphasis here is more on the heuristics of discovery and on the action of proving than on the guarantee of formal proofs. The setting proposed is that of the mathematician, who is more interested in associating the right premises with the desired conclusion, and then establishes ‘the status of each proof’ in terms of the checking methods adopted, the quantity of ingenuity involved and the level of credibility achieved. Logic seems to change its objective in this letter, moving from a tool used to preserve truth and guarantee correctness throughout the demonstration to a tool capable of obtaining results via a series of methods for checking their plausibility according to premises and inference rules.

The second passage that deserves our attention is in the second, undated letter to Newman (Copeland 2004: 214–216) and concerns the usefulness of the Hilbertian attitude to mathematics. According to Turing, Newman’s approach was too radically Hilbertian compared to his own. The effect of this Hilbertian and mechanical approach would be the existence of a single machine to prove and check conclusions. The mathematician would just need to become accustomed to it and to accept the existence of propositions impossible to demonstrate. Turing proposed, instead, a different vision of logic that maintained the central role of the mathematician: he imagined ‘different machines allowing different sets of proofs, and by choosing a suitable machine one can approximate ‘truth’ by ‘provability’ better than with a less suitable machine […] the choice of proof checking machine involves intuition’ (ibid.). The mention of different machines implied the existence of various possible sets of axioms and inference rules that could be used, and the choice of the right one called on the expertise of the mathematician in picking out, from the various possibilities, the machine that allowed the best possible approximation of truth by provability.

Logic was again shaped as a heuristic tool for demonstration, and it is possible to interpret the various machines as various programs of intelligent machines. Turing’s experience of foundational logic produced several relevant results beyond the negative solution of the Entscheidungsproblem. It was not possible to identify a single formal system in which all the interesting theorems could be proved; the mathematician needed to explore different systems, exploiting his expertise in order to achieve the proof. The proof itself could not display the same status of certitude at all times, because its plausibility was dependent on the checking method used and on the truth of the intuition on which it was based. This description of logic, completely new compared to the traditional approach of the foundational schools, opens the way to a new conception of the machine, based on different possible programs, whose applications are the fruits of the choice of the mathematician or of the machine itself, provided that it can learn from experience and be trained to judge the effectiveness of the chosen rules and premises. This new perspective would have notable consequences for Turing’s later research, and in particular it would be crucial in shaping the intelligent machine defined in his report of 1948.

The second Turing Machine as described in 1948

In 1945, at the end of WWII, during which Turing had been engaged in code-breaking operations at Bletchley Park (see Copeland 2004: 217-264 for more details about Turing’s wartime work), he accepted an offer to work for the National Physical Laboratory (NPL), where he was in charge of developing the first UK project to build an electronic computing machine, called ACE (Automatic Computing Engine). Once the computing machine project was under way, Turing obtained permission for a sabbatical leave at Cambridge University, where he spent the 1947/1948 academic year. At the end of this year he had to report on his achievements. The report discussed at the NPL was Intelligent Machinery (Turing 1948), published only in 1968 (Evans and Robertson 1968). Though the paper can be considered a seminal work in the field of Artificial Intelligence research (which had not even been conceived as yet), it was severely criticised by Charles Darwin, the then director of the NPL, who was also upset with Turing’s decision to accept a job at Manchester University instead of keeping his promise to stay at the NPL after the sabbatical. The report is full of brilliant suggestions for the future of artificial intelligence; however, in this paper I will concentrate on some issues that can be more easily associated with von Neumann’s and Wiener’s attitudes towards machines.

Equivalence between the practical and the theoretical machine

Turing compared the human brain to a computer and admitted that while the brain is a continuous controlling machine, the computer is a discrete controlling machine. According to Longo (2007), a continuous controlling device was a confused definition of what is now called a dynamical system. In Turing’s opinion, it was possible to simulate a brain with a discrete controlling machine, without losing its essential properties, ‘however the property of being ‘discrete’ is only an advantage for the theoretical investigation, and serves no evolutionary purpose, so we could not expect Nature to assist us by producing truly ‘discrete’ brains’ (Turing 1948/2004: 412-413). In Longo’s interesting perspective on this description of the brain and its relation with the machine, the simulation of the brain by a machine cannot be interpreted as a model to be achieved by the machine. Turing seemed aware of the fact that the machine could cheat and pretend to be a brain (as in the Turing test, see Turing 1950/2004) without really following the same causal mechanisms as the brain’s workings. So the brain, being a dynamical system, could not be reproduced by a discrete system similar to a computer; it could only be simulated by it, as Turing implicitly suggested in his work. If we accept this interpretation, we have to admit that Turing was not convinced of the computational model of intelligence, contrary to what many interpreters erroneously affirm. He was interested in performing intelligent tasks with a machine, but his belief consisted of two alternative views that were likely to be complementary. The first part of his belief, in the late 1940s, was that the simulation of intelligence by a computer (based on the logical model that he had helped to create) would not represent a model of intelligence similar to the human brain. It was just a matter of ‘cheating’. The machine could perform activities that a jury composed of non-experts could believe to be intelligent behaviours.

However, Turing was inclined to believe that it was possible to reproduce human intelligence with a machine, though perhaps only with a type of machine different from the computational model at the base of the electronic stored-program machine, even if the two models shared some basic characteristics. In the next part I shall discuss the various tentative descriptions of the intelligent machines of the future as they were imagined by Turing, underlining the differences from the earlier computational device that was already under construction with the collaboration of Turing himself.

Possible intelligent machines of the future

One of the most striking definitions of machines offered by Turing is introduced after a long list of other types of machines more similar to the computer, such as ‘Logical Computing Machines’ (LCM), ‘Practical Computing Machines’ (PCM), ‘Universal Practical Computing Machines’ (UPCM), etc. The definition is rather loose:

We might instead consider what happens when we make up a machine in a comparatively unsystematic way from some kind of standard components. We could consider some particular machine of this nature and find out what sort of things it is likely to do. Machines which are largely random in their construction in this way will be called ‘unorganised machines’ (Turing 1948/2004: 416)

Unorganised machines, then, are machines that are not built for a specific purpose, and their hardware structure is not planned precisely, as it is with PCMs. The case of the UPCM is rather complicated and I shall discuss it later in the section. As far as unorganised machines are concerned, it is possible to acknowledge the fact that they are randomly put together, using similar elementary units connected without a precise reason. The structure of these machines is more similar to a network than to a computing machine in the von Neumann or Turing style, and in fact Copeland and Proudfoot (1996) consider these machines an ‘anticipation of connectionism’. In my view they are new machines whose characteristics can only loosely be compared to the previous ones. Their really notable features can be summarized as the freedom in construction, the major role played by ‘interference’ (that is, the effects the environment produces on them), and the learning protocols that produce the changes (in terms of better performance) in the machines’ behaviour.

In the next part, I shall examine these characteristics one by one to show the differences between the PCM and the UPCM, provided that, according to Turing, the latter can really be built; I will argue that he doubted whether it would be possible. The model for the unorganised machine was the brain with its neural connections, and not the human ability to calculate, as in the previous Turing Machine. He wanted to model a machine on the hardware of the brain, with all its complexities and links with the external world. During the 1930s, by contrast, he had been fascinated by the idea of obtaining a substitute for a single human function, that of calculating. The starting point in the two cases is very different. Only a machine whose architecture is similar to the brain could perform truly intelligent tasks: that is Turing’s belief in the 1948 report. The builder should set the machine free to connect units as suggested by brain structure:

we believe then that there are large parts of the brain, chiefly in the cortex, whose function is largely indeterminate. In the infant these parts do not have much effect: the effect they have is uncoordinated. In the adult they have great and purposive effect: the form of this effect depends on the training in childhood. (Turing 1948/2004: 424)

The building of the machine should take into account nature and neurological evidence. The first programming of the machine was not supposed to determine its future purposes and performance. The trainer would obtain results from the device after a long and cumbersome learning process that started with a basic table of instructions and was driven by a random series of inputs and by the external intervention of the trainer, who administered pleasure or punishment according to the performance offered by the machine and the task to be achieved. This focus on neurological studies of the human brain was also shared by Wiener and von Neumann, as we shall discuss in later sections. The machine was not exhausted by the description of its programming. For example, the P-type machine, a special kind of unorganised machine, was defined as an ‘LCM without a tape, and whose description is largely incomplete’ (Turing 1948/2004: 425). When the machine reached a configuration where the subsequent action was undetermined, an action was chosen at random and tried out tentatively; it was cancelled if a pain stimulus followed, and became permanent if a pleasure stimulus followed. We have in this case some interesting new concepts applied to machines.
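The training cycle just described can be made concrete with a small toy sketch. It is only an illustration under strong simplifying assumptions and not a reconstruction of Turing’s own design: the class name PTypeSketch, the function train, the dictionary-based table and the idea of a trainer who judges every single step are all hypothetical choices made here for the sake of the example.

```python
import random


class PTypeSketch:
    """Toy learner with an incomplete table of instructions (hypothetical names)."""

    def __init__(self, actions, seed=0):
        self.actions = list(actions)
        self.permanent = {}   # configuration -> action fixed by a pleasure stimulus
        self.tentative = {}   # configuration -> random action currently on trial
        self.rng = random.Random(seed)

    def act(self, config):
        # A determined entry is simply followed.
        if config in self.permanent:
            return self.permanent[config]
        # An undetermined entry is filled by a random, tentative choice.
        if config not in self.tentative:
            self.tentative[config] = self.rng.choice(self.actions)
        return self.tentative[config]

    def pleasure(self):
        # Pleasure makes every tentative choice permanent.
        self.permanent.update(self.tentative)
        self.tentative.clear()

    def pain(self):
        # Pain cancels every tentative choice.
        self.tentative.clear()


def train(machine, target, max_rounds=1000):
    """External trainer (an assumption of this sketch): rewards each step that
    matches the desired behaviour and punishes each step that does not."""
    for _ in range(max_rounds):
        if machine.permanent == target:
            break
        for config, wanted in target.items():
            if machine.act(config) == wanted:
                machine.pleasure()
            else:
                machine.pain()
    return machine


if __name__ == "__main__":
    desired = {"a": 1, "b": 0, "c": 1}
    learner = train(PTypeSketch(actions=[0, 1]), desired)
    print(learner.permanent)
```

The point of the sketch is that the table of instructions is completed from outside: the program contains no rule that fixes the behaviour in advance, and the final table depends entirely on the sequence of rewards and punishments supplied by the trainer.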

Characteristics of unorganised machines

First of all, random choice is the only tool that can be used with an incomplete description and it guarantees the introduction of new actions in machine behaviour that can be tested, sanctioned or approved. The judgement is external to the machine itself and consists of the method used by the trainer to exercise his/her educative action on the device. We must underline here that this activity represents the organization of the machine and it is performed with the help of an external ‘teacher’. The introduction of the concept of organization provides a new perspective on machines, their construction, their objectives and their ontology (if we are allowed to mention it).

Universality is something to be attained through the organization process, and it can be obtained only by the ‘interference’ of the external environment, which in this case is the activity performed by the trainer to achieve the objective of education. Universality is described in this context as a final goal and not as a standard characteristic of the machine, whereas the logic machine was born universal and ignored every external interference. The differences between the abstract logic machine, known as the Turing Machine, and the unorganised machine could not be more apparent than in this discussion. The identity of the Turing Machine was univocally determined by the table of instructions, while in the unorganised machine we have an incomplete table of instructions with the inclusion of various random choices for the uncertain steps, and the tireless effort of the trainer is crucial to organizing the ‘identity’ of the machine by transforming its reactions to the stimuli. The external presence is fundamental for the machine, which learns how to behave in certain circumstances with the help of environmental interference. In the education of children, external influences should not be underestimated, since they are the vehicles through which children complete their entrance into the world and develop their capabilities. Turing claimed that the training of a machine should follow the example of the natural education process, including mistake correction and causality of some experiences. It is crucial in this context to understand the role of organization in defining machine identity, which cannot be exhausted by building and programming the machine, as was the case for the logical prototype machine. Turing changed his mind after the rethinking he began in 1939, and included unpredictable elements in the machine structure in order to obtain intelligent performance from it.

The paper also discusses the possibility of an equivalence proof between the practical and the theoretical machine (see Numerico 2005 for more details on this point), and Turing states precisely his opinion on the uselessness of such a proof: ‘This in effect means that one will not be able to prove any result of the required kind which gives any intellectual satisfaction’ (Turing 1948/2004: 416).

There are many other features of the new machine that stem from Turing’s changed perspective on machine characteristics, but I wish to stress just one last quality that the machine should possess in order to guarantee the effectiveness of its organization via a training process. This quality is ‘initiative’, which the device had to demonstrate in obtaining new results and creating new behavioural patterns. ‘Initiative’ is defined rather loosely, only in terms of its opposition to discipline:

Discipline is certainly not enough in itself to produce intelligence. That which is required in addition we call initiative. This statement will have to serve as a definition. Our task is to discover the nature of this residue as it occurs in man and to try and copy it in machines. (Turing 1948/2004: 429)

Initiative is the capability that is residual after we have applied discipline to task performance. And there is no precise way to specify its characteristics. The idea is to allow the machine to make choices or decisions. It is not very clear in Turing’s terms how to introduce these capabilities into the device, while it is evident that this element plays a crucial role in creating an intelligent machine. According to one of the methods proposed by Turing to obtain a device with initiative, in fact, it is apparent that the characteristics of the Universal Machine are not sufficient to produce an intelligent device. Turing’s suggestions are unequivocal:

start with an unorganized machine and try to bring both discipline and initiative into it at once, i.e. instead of trying to organise the machine to become a universal machine, to organise it for initiative as well. (Turing 1948/2004: 430)

Organisation, initiative, error-checking and controlling, and the learning process, are the key elements of the new type of machine proposed in Turing’s paper. They are concepts linked to the project of creating a model of intelligence by using a mechanical device that should be similar to the neurological reactions of the human cortex in the passage from child to adult. This interest in the natural phenomenon of intelligence and in its possible mechanical reproduction is based on a new start compared to the logical model of the machine that was at the core of the stored-program computer project. This new start—which was rather controversial at this stage for Turing himself—was also shared by von Neumann and Wiener as I shall show in the following sections.

John von Neumann: the logic of self-reproducing automata

Contrary to his habit, von Neumann’s work on the theory of automata was solitary research, not funded by any governmental agency. He started his study in 1946, soon after the project for a stored-program computing machine (Aspray 1990: 189, 317). However, his first official presentation of results took place in 1948 at the Hixon Symposium. The progress and organization of the material produced by von Neumann on this subject is rather complicated, because many of the manuscripts related to it were incomplete at the time of his death, except for the text of the Hixon Symposium lecture, published in 1951. In view of these circumstances, I have decided to base most of my discussion on this paper and to mention the other works only marginally. I chose this paper also because the lecture was given in 1948, the date of Turing’s report analyzed in the previous section and of the first publication of Wiener’s book on cybernetics (Wiener 1948).

In his talk at the Hixon Symposium, von Neumann compared natural and artificial automata, offering some suggestions on how to emulate a natural automaton by an artificial one. The centre of his interest, though, was not the reproduction of intelligent behaviours, but the replication of some biological capabilities of natural automata, such as that of self-reproduction. Viewed from this perspective, von Neumann’s approach is rather different from Turing’s. However, there are some similarities in the two methods, because von Neumann looked for the crucial characteristics of natural automata to be reproduced in artificial ones. Another overlap is between the comparison of natural and artificial automata in von Neumann’s work and the relationship described by Turing between continuous controlling machines, such as the brain, and discrete controlling devices, such as computers. According to von Neumann, in fact, ‘when the central nervous system is examined, elements of both procedures, digital and analogy, are discernible’ (von Neumann 1948/1961: 296). In this sense all comparisons between living organisms and computing machines ‘are certainly imperfect’ (ibid.: 297). He was well aware of the fact that the comparison was an ‘oversimplification’, but needed to adopt it for argument’s sake, acknowledging that it was just a working hypothesis. This attitude was the same as Turing’s position on the relationship between brain and computer.

Another similarity was the critical view of traditional mathematical logic in relation to the needs of the logic of automata. Von Neumann’s position on logic was stated even more explicitly than Turing’s. He envisaged that one of the limitations of artificial automata was the lack of an adequate logical theory. The logic available, he stated,

deals with rigid, all-or-none concepts, and has very little contact with the continuous concept of the real or of the complex numbers, that is, with mathematical analysis. […] Thus formal logic is, by the nature of its approach, cut off from the best cultivated portions of mathematics, and forced onto the most difficult part of the mathematical terrain, into combinatorics […]. (von Neumann 1948/1961: 303)

The all-or-none concepts cannot work in a context where the variables to manipulate are continuous; in particular, automata need to achieve certain results within certain time periods, and there is always the chance of a component failure causing completely unreliable output. Considering this situation, von Neumann affirmed that: ‘it is clearly necessary to pay attention to a circumstance which has never before made its appearance in formal logic’ (ibid.). In order to tackle these new circumstances and to correctly represent the status of automata and their behaviours, it was necessary to invent a new logic or to modify formal logic so that it could take into account the new requirements of automata modelling. Von Neumann’s vision was that it was likely that ‘logic will have to undergo a pseudomorphosis to neurology to a much greater extent than the reverse’ (von Neumann 1948/1961: 311). In order to create mechanisms analogous to those of natural automata, their logic had to transform itself in such a way as to welcome the characteristics of neurology itself. This could be interpreted as another connection with Turing’s theory of unorganised machines, insofar as it implies a deep study of how neurological knowledge can be applied to machines.

Von Neumann envisaged the need for an ‘exhaustive study’ on this subject, and in the meantime offered some suggestions on the differences with the traditional tools then available:

- The actual length of ‘chains of reasoning’, that is, of the chains of operations, will have to be considered.

- The operations of logic […] will have to be treated by procedures which allow exceptions (malfunctions) with low but non-zero probabilities. All of this will lead to theories which are much less rigidly of an all-or-none nature than past and present formal logic. They will be of a much less combinatorial, and much more analytical, character (von Neumann 1948/1961: 304).

Logic needs to be situated in time, taking into account the length of reasoning chains, whereas traditionally it abstracts from any duration; it also has to consider error management in its operations. According to von Neumann, there are two opposing philosophies of error management in natural and artificial automata. Natural automata tend to minimize the effects of mistakes or malfunctions, because they can preserve the correctness of their operations even in the presence of some errors, while artificial automata try to maximize them so that they can be detected and removed more easily (see von Neumann 1948/1961: 306). In von Neumann’s view, the new logic should be conceived ‘to be able to operate even when malfunctions have set in’, as happens in nature.

We can recognize a similarity between Turing’s treatment of mistakes as necessary in the evolution of behaviour and von Neumann’s hope of accepting mistakes in artificial automata’s operations as an inevitable consequence of a continuous process. Both scholars wanted to dispose of the excessive rigidity typical of the traditional logic approach which does not take into account the stimuli of real world situations. The perspectives are different though; while Turing considers mistakes as useful elements for the production of intelligence, von Neumann considers their presence as a probabilistic event needing to be taken into account in the evolution of the artificial automaton.

The notion of automata was not explicitly defined in von Neumann’s 1948 paper (see Aspray 1990: 189), just as the notion of unorganised machines was not precisely sketched by Turing in his 1948 report. This parallel reflects the early stage of study in both cases. There are, however, some common directions of research: they both linked the concept of these new machines with that of organization; they both compared the Universal Machine with their new device, looking for similarities and differences between the two. We have already seen the two issues treated in Turing’s report; I shall now show them in relation to von Neumann’s approach.

It is beyond the scope of this paper to describe von Neumann’s actual contribution to the theory of automata (for a detailed analysis of this contribution see Shannon 1958, Ulam 1958, and Burks’s introduction to von Neumann 1966: 1-28). I wish to underline the centrality of another variant of the concept of organization, which in von Neumann’s paper is identified with the intuitive and informal notion of complication. He very clearly outlined his objective in creating a systematic theory of automata: he wanted to rigorously define the concept of ‘complication’. His aim was to illustrate the following proposition: ‘This fact, that complication, as well as organization, below a certain minimum level is degenerative, and beyond that level can become self-supporting and even increasing, will clearly play an important role in any future theory of the subject’ (von Neumann 1948/1961: 318). Complication, like organization, plays a crucial role in von Neumann’s theory of automata, as it does in Turing’s. However, while the British scholar imagined an external trainer and environmental effort to complete the organization of the unorganised machine, von Neumann was convinced of the possibility of building a self-reproducing automaton whose characteristics would be the output of a complication process. This would be achieved through the unaided and adequate assembly of primitive elements, each with a precise task, which together would produce self-complication and consequently self-reproduction, the final goal of the whole process.

Von Neumann’s project was the creation of self-reproducing automata, using a sort of program, similar to the instructions adopted in Turing’s Universal Machine. The automaton should be created with ‘the ability to read the description and to imitate the object described’ (von Neumann 1948/1961: 314). However, he imagined broadening the concept of the Universal Machine, whose output was too narrowly conceived to be a simple tape with symbols on it, while ‘what is needed for the construction to which I referred is an automaton whose output is other automata’ (von Neumann 1948/1961: 315). The model of a self-reproducing automaton is a generalization of the concept of the Universal Machine, including the possibility of producing another machine as the output of the instructions and of the connected parts. In Turing’s later project, the intelligent machine needed to be educated to discipline (as a Universal Machine), but this was not enough. The machine needed to be given initiative, an extra capability that would result in creative gestures and achievements. At the same time, on the other side of the Atlantic, perhaps without being aware of Turing’s work, von Neumann wanted to add to the Universal Machine the capability of producing other similar automata instead of a bare list of zeros and ones.
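The idea of a device that reads a description and builds the object described, including a copy of the description itself, can be illustrated very loosely in software. The following is a minimal sketch and not von Neumann’s construction: program text stands in for physical parts, and the names TEMPLATE and OFFSPRING are hypothetical.

```python
# The "description": program text with one slot, {!r}, into which a
# quoted copy of the description itself will be inserted.
TEMPLATE = 'TEMPLATE = {!r}\nOFFSPRING = TEMPLATE.format(TEMPLATE)\n'

# The "constructor" step: read the description and build what it describes.
# The result is the text of a program that carries the same description
# and performs the same construction.
OFFSPRING = TEMPLATE.format(TEMPLATE)
```

Executing the text held in OFFSPRING yields a program whose own OFFSPRING is identical to it. In this toy setting the output is therefore not a bare string of symbols but another ‘machine’ of the same kind, obtained by using the description twice: once as instructions to follow and once as data to copy.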

Turing and von Neumann, though starting from different perspectives and with different aims, shared a similar view about the future of machines, trying to integrate logic results with the necessities of time-dependent processes, and with their common interest in neurological and biological mechanisms that should be modelled so as to be integrated into machine projects. They both considered a revision of the traditional tool of mathematical logic necessary to obtain an adequate resource for designing these new devices, such as intelligent machinery or self-reproducing automata. They both discussed and integrated the concept of organization and self-organization (which von Neumann framed in terms of complication) in the successful planning of a new generation of machines. Learning, growing and reproducing itself were the new processes that needed to be performed by the new-style devices, and in order for these activities to take place it was necessary to invest energy in developing a new logic (we can call it the logic of life and of dynamic processes) instead of the classical formal logic created during the first 30 years of the 20th century.

In the next section we shall concentrate on the dialogue on these ideas between von Neumann and Norbert Wiener, in order also to specify the contribution of Cybernetics to this new style of machine.

Von Neumann and Wiener on neurology

Norbert Wiener (1894–1964) is unanimously considered the father of Cybernetics, the discipline that studies control and communication in both animals and machines. According to Wiener, ‘the most fruitful areas for the growth of the sciences were those which had been neglected as a no-man’s land between the various established fields’ (Wiener 1948/1961: 2). The interdisciplinary character of Cybernetics was central to the scientific and ethical effort of the group of people sharing the ideas and procedures at the heart of this new discipline. Communication between scientists from different research areas was the decisive factor in the development of Cybernetics. Its interdisciplinary character, completely new compared to previous scientific enterprises, was based on a novel study of communication and control in animals and machines2. The conferences—where scientists from different backgrounds tried to communicate with each other, hence transforming their vocabulary and merging their ideas—were the starting point for publicizing this new research area.3

Wiener stated that communication and control were interconnected. According to him, control was nothing more than a special case of communication: ‘When I control the actions of another person, I communicate a message to him, and although this message is in the imperative mood, the technique of communication does not differ from that of a message of fact’ (Wiener 1950/1954: 16). Communication, indeed, was interacting with another person or a machine in an attempt to obtain some feedback from the other entity. In this sense, the feedback in the communication process was related to the second party in the communication interaction and was primarily accessible only to itself, not to the first party in this exchange. Wiener envisaged the centrality of communication beyond human communication. He clearly stated that the thesis of his book The Human Use of Human Beings was that ‘society can only be understood through a study of the messages and the communication facilities which belong to it; and that in the future development of these messages and communication facilities, messages between man and machines, between machines and man, and between machine and machine are destined to play an ever increasing part’ (ibid.). One of the major goals of Cybernetics, as a trans-disciplinary enterprise, was the study of ‘the language and techniques that will enable us indeed to attack the problem of control and communication in general’ (p. 17). Wiener’s project consisted of a theoretical perspective as well as practical involvement in the details of applications in order to ‘find the proper repertory of ideas and techniques to classify their particular manifestation under certain concepts’ (ibid.).

The centrality of communication implied a series of interesting consequences in terms of the definition of the ‘ideal computing machine’. According to Wiener, in fact, the machine logic could be very peculiar: ‘we thus see that the logic of the machine resembles human logic, and, following Turing, we may employ it to throw light on human logic. Has the machine a more eminently human characteristic as well—the ability to learn? To see that it may well have even this property, let us consider two closely related notions: that of the association of ideas and that of the conditioned reflex’ (Wiener 1948: 126). Another element that should be considered part of logic is the capability of associating ideas, as described in Locke or Hume. Ideas tend to ‘unite themselves into bundles according to the principles of similarity, contiguity, and cause and effect’ (ibid.: 127). Wiener was in line with Turing and von Neumann in the strong belief that logic needed to be at least enlarged, if not changed, in order to include some arguments and methods appropriate to human logic, and adequate to the reproduction of such human processes in the machine.

His view on logic can be understood in this synthetic passage, in which he clearly stated that logic was an open system and not a closed set of postulates useful for every deduction:

I could not bring myself to believe in the existence of a closed set of postulates for all logic, leaving no room for any arbitrariness in the system defined by them […].

To me, logic and learning and all mental activity have always been incomprehensible as a complete and closed picture and have been understandable only as a process by which man puts himself en rapport with his environment. It is the battle for learning which is significant, and not the victory. (Wiener 1956: 324)

If we compare Wiener’s vision of logic to that of von Neumann and Turing, there are many differences in the conception of the simulation of the nervous system via a mechanical device. Wiener had more faith in the possibility that the communication paradigm could be used to reproduce some macroscopic neurological sensations and behaviours. He was greatly interested in the possibility of ‘communicative mechanisms in general, or for semi-medical purposes for the prosthesis and replacement of human functions which have been lost or weakened in certain unfortunate individuals’ (Wiener 1950/1954: 163). According to his vision, although ‘it is impossible to make any universal statements concerning life-imitating automata’ (ibid.: 32), there are some features that could serve the purpose of imitating life behaviours. ‘Thus the nervous system and the automatic machine are fundamentally alike in that they are devices which make decisions on the basis of decisions they have made in the past’ (ibid.: 33). The idea that the nervous system as a whole could be reproduced by a machine organized in terms of feedback and communication with the outer world is, thus, in contrast with the position assumed by von Neumann.

The debate can be better understood by reading a letter von Neumann wrote to Wiener in late 1946, aiming to set the agenda for a meeting4 between the two. In this letter von Neumann explicitly expressed all his doubts about the possibility of emulating the human nervous system, or any other nervous system, by any kind of automaton because of its extreme complexity. In his view all the ‘Gestalt’ theories were incapable of helping make any progress in the comprehension of such a complex device, which placed him openly at odds with Wiener’s conviction. The solution proposed in the letter was to decrease the complexity of the mechanism to be reproduced, without losing its self-reproductive character.

I feel that we have to turn to simpler systems. It is a fallacy, if one argues, that because the neuron is a cell (indeed part of its individual insulating wrapping is multicellular), we must consider multicellular organisms only […] For the purpose of understanding the subject, it is much better to study an earlier phase of its evolution preceding the development of this high standardization-with-differentiation. […] This is especially true, if there is reason to suspect already in the archaic stage mechanisms (or organisms) which exhibit the most specific traits of the simplest representatives of the above mentioned ‘late’ stage. (Letter to Wiener from von Neumann cit. §9)

In order to avoid the complexity problem, von Neumann was ready to give up the model of the nervous system and choose instead some unicellular organism capable of self-reproducing functions. This controversial point was not resolved in the meeting or in any subsequent public debate. Von Neumann continued to attend Cybernetics lectures, keeping intact his esteem for his original colleague, though the two were becoming increasingly distant in terms of their scientific agendas5.

Alongside the positions of the two American scholars there was a third, overlooked one: that of Alan Turing, who had been working on his theory of morphogenesis6 since the early 1950s. His research concerning computer simulation aimed at ‘a theory of the development of organization and pattern in living things’ (Copeland 2004: 508). Turing was analysing the shape of life starting from a description of the possible mechanism by which the genes determine the structure of the related organism. In a letter to the biologist J.Z. Young (dated 8 Feb. 1951) he admitted that his work on morphogenesis was connected with the possibility of understanding the structure of the brain.

I am really doing this [the mathematical theory of embryology] now because it is yielding more easily to treatment. I think it is not altogether unconnected with the other problem [the anatomical questions about the brain]. The brain structure has to be one which can be achieved by the genetical embryological mechanism, and I hope that this theory that I am working on may make clearer what restrictions this really implies. (Copeland 2004: 517)

Turing wanted to start from embryological mechanisms to arrive at a stage where brain structure could be explained and very likely reproduced, following the plans of Cybernetics. His interests moved along the same path as those of the Macy lectures group, and he was reflecting on issues similar to those of Wiener and von Neumann, though his position in Manchester did not permit adequate dissemination of his research results. If further evidence were needed, this letter to Young confirms the converging research programmes of the three scholars.

Concluding remarks

Turing, von Neumann and Wiener shared similar subjects of study, focused on the theory of organization, communication and the development of a new kind of logic. It is striking that Turing too, who was based in the UK and did not discuss his ideas with a group of scholars (with the exception of his sporadic attendance at Ratio Club7 meetings), was reflecting on the unorganized machine, on the behaviour of cells and on the development of the shape of life.

Von Neumann’s effort to describe the theory of automata ended up with the following definition:

The formalistic study of automata is a subject lying in the intermediate area between logics, communication theory, and physiology. It implies abstractions that make it an imperfect entity when viewed exclusively from the point of view of any one of the three disciplines […]. Nevertheless an assimilation of certain viewpoints from each one of these three disciplines seems to be necessary for a proper approach to that theory. (von Neumann 1966: 9, emphasis in the original)

We can affirm that a different mix of these three disciplines was at the core of the research of the three scholars and that they never stopped the innovation process in defining the role of machines and their relationships with human beings, both in terms of communication with them and simulation of their characteristics. This paper is purely a first attempt at removing some of the prejudices surrounding these figures, and establishing an initial tentative reconstruction of their beliefs on the output of a new conception of machines.

I have argued that the three scientists, though working on different projects and concentrating on different scientific achievements, ended up following similar directions of research on the definition of a new status for logic, and the elaboration of new concepts such as organization, interaction, interference, error management and machine complexity. My view is that in 1948 a second revolution concerning computing machines started, and it was much more important than the mere project of the first calculating machine in 1945; the effects of this revolution are still visible now in the centrality of interaction, in the study of genetic algorithms, in research on bioinformatics and so forth.

References

Aspray W. (1990) John von Neumann and the Origins of Modern Computing, MIT Press, Cambridge (Mass.).

Copeland J. (2004) The Essential Turing, Clarendon Press, Oxford.

Copeland J. and Proudfoot D. (1996) ‘On Alan Turing's Anticipation of Connectionism’, Synthese, Vol. 108, N.3: 361-377

Cordeschi R. (2002) The Discovery of the Artificial, Kluwer, Dordrecht.

Davis M. (1965) The Undecidable, Raven Press, New York.

Davis M. (2000) The Universal Computer, W.W. Norton & Co., New York.

Evans C. R. and Robertson A. D. J. (1968) (eds.) Cybernetics, Butterworths, London.

Heims S. (1984) John von Neumann and Norbert Wiener. From Mathematics to the Technologies of Life and Death, MIT Press, Cambridge (Mass).

Ince D. C. (1992) (Ed) Collected Works of A. M. Turing: Mechanical Intelligence, North-Holland, Amsterdam.

Longo G. (2007) ‘Laplace, Turing and the ‘Imitation Game’ Impossible Geometry: Randomness, Determinism and Programs in Turing's Test’ in Epstein, R., Roberts, G., & Beber, G. (Eds.). The Turing Test Sourcebook, Springer, Amsterdam.

Numerico T. (2005) ‘From Turing Machine to Electronic Brain’ in J. Copeland (Ed.) Alan Turing’s Automatic Computing Engine, Oxford University Press, Oxford:173-192.

Shannon C. E. (1958) ‘Von Neumann's Contributions to Automata Theory’, Bull. Amer. Math. Soc. Vol. 64: 123-129.

Swinton J. (2004) ‘Watching the Daisies Grow: Turing and Fibonacci Phyllotaxis’, in Teuscher C. (Ed.) Alan Turing: Life and Legacy of a Great Thinker, Springer, Berlin: 477-498.

Turing A.M. (1937), "On Computable Numbers with an Application to the Entscheidungsproblem", Proc. London Math. Soc., (2) 42: 230-265,(1936-7); reprinted in Davis (1965):116-154.

Turing A.M. (1939), "Systems of Logic based on Ordinals" Proc. Lond. Math. Soc., (2), 45: 161-228; reprinted in Davis (1965), 155-222 and in Copeland (2004): 58-90.

Turing A.M. (1945), ‘Proposal for the development in the Mathematical Division of an Automatic computing engine (ACE)’, Report to the Executive Committee of the National Physical Laboratory, 1945, in B.E. Carpenter and R.N. Doran (Eds) A.M. Turing's ACE report of 1946 and other papers, MIT Press, Cambridge Mass. 1986: 20-105, reprinted in Ince (1992): 1-86.

Turing A.M. (1947), "Lecture to the London Mathematical Society on 20 February 1947" reprinted in Ince (1992): 87-105 and in Copeland (2004): 378-394.

Turing A.M. (1948), "Intelligent Machinery" Report, National Physical Laboratory, in B. Meltzer and D. Michie (Eds) Machine Intelligence, 5, Edinburgh Univ. Press, 1969: 3-23; reprinted in Ince (1992): 107-127 and in Copeland (2004): 410-432.

Turing A.M. (1950), "Computing Machinery and Intelligence", MIND, 59: 433-460 reprinted in Ince, (1992): 133-160 and in Copeland (2004): 441-464.

Turing A.M. (1954) ‘Solvable and Unsolvable Problems’, Science News, in Copeland (2004): 582-595.

Ulam S. (1958) ‘John von Neumann’, Bull. Amer. Math. Soc, Vol. 64: 1-49.

Von Neumann J. (1948/1961) ‘General and logical Theory of Automata’, Hixon Symposium, reprinted in Taub A.H. (ed) Collected Works, Vol.V: 288-328.

Von Neumann J. (1958) The Computer and the Brain, Yale Univ. Press, New Haven.

Von Neumann J. (1966) Theory of Self-reproducing Automata (edited and completed by A.W. Burks), University of Illinois Press, Urbana.

Wheeler, M., Husbands, P. and Holland, O. (2007) The Mechanisation of Mind in History, MIT Press, Cambridge, MA.

Wiener N. (1948/1961) Cybernetics: Or Control and Communication in the Animal and the Machine, The MIT Press, Cambridge (Mass.).

Wiener N. (1956) I am a Mathematician. The Later Life of a Prodigy, The MIT Press, Cambridge (Mass.).

Wiener N. (1950/1954) The Human Use of Human Beings, Houghton Mifflin, Boston.

Note about Turing references: wherever possible, citations of Turing’s papers and their page numbers refer to Copeland 2004.

Notes

1 I would like to thank Oron Shagrir and Jack Copeland for inviting me to the International Workshop on the Origins and Nature of Computation in Jerusalem and Tel Aviv. I would also like to thank Jonathan Bowen for his kind help in editing and revising a first draft of the paper, and Roberto Cordeschi for many useful conversations and stimuli on this subject.

2 See Cordeschi (2002) for details on the history and development of Cybernetics in the United States and Europe.

3 We refer here to various scientific conferences, such as the Macy Conferences in New York and other similar meetings. For details on Macy, see Heims (1991).

4 The meeting took place at MIT on 4 December 1946.

5 See Heims (1984, chapt.10) for a more detailed comparison between the two approaches on this problem.

6 For more information about Turing’s work on morphogenesis see the work of Jonathan Swinton available at http://www.swintons.net/jonathan/turing.htm and Swinton (2004).

7 See Wheeler et al. 2007 for more details on the Ratio Club.

Illustration credits

- 1 Courtesy of the author

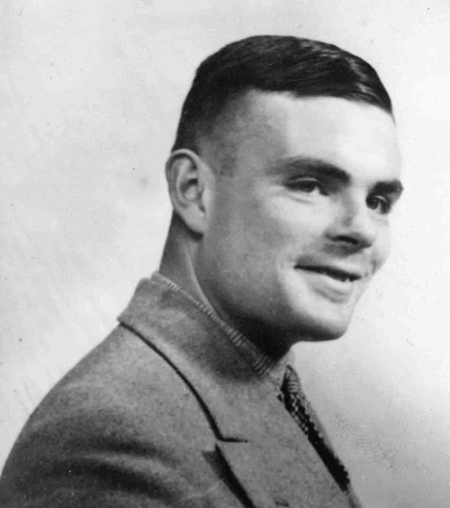

- 2 Source: Beryl Turing and King's College Library, Cambridge

- 3 Source: Public Domain, courtesy of St. Andrews University

- 4 Source: University of Göttingen, 1912 available from http://upload.wikimedia.org/wikipedia/en/9/9f/Hilbert1912.jpg

- 5 Source: Archives of the Institute for Advanced Study, Princeton; photograph by Alan Richards

- 6 Source: P R Halmos, I have a photographic memory (Providence, 1987) available from http://www-gap.dcs.st-and.ac.uk/~history/BigPictures/Newman.jpeg

- 7 Source: United States Library of Congress, Prints and Photographs Division, ID ggbain.39219

- 8 Source: Beryl Turing and King's College Library, Cambridge

- 9 Copyright Time Inc. via Google Images LIFE Collection

- 10 Copyright Time Inc. via Google Images LIFE Collection