Artificial Intelligence Using P-Type Unorganised Machines

Ovi Chris Rouly

Introduction

A few years ago, while attempting to better understand the P-Type machine algorithm, an opportunity to speak with Dr. Jack Good at Virginia Tech (University) in Blacksburg, Virginia presented itself at the suggestion of Dr. Jack Copeland from the University of Canterbury in New Zealand. Dr. Good explained that he had worked with Turing at Bletchley Park and Manchester University in England and that it seemed to him that the essay "Intelligent Machinery" (Turing 1948) was, "never peer-reviewed" (personal conversation with J. Good in Blacksburg, VA. 2004). Further, it has always seemed curious why Turing would have chosen His Majesties Stationary Office (HMSO) to become the first public printer of the essay. Taken together, these observations somehow made it seem less curious that the P-Type algorithm had traveled a path of virtual obscurity since almost the day of its first printing. Nevertheless, there were still nagging questions.For example, it has always seemed ironic that within the "Intelligent Machinery" essay, Turing would explicitly call for the instantiation of the P-Type algorithm in electronic computing hardware at a time in the future whenever "some electronic machines are [were] in actual operation" (ibid.: 22). He made the call even as he commented, "I made a start on the latter but found the work [building the paper and pencil machines by hand] altogether too laborious at present". Thus, it seems reasonable to conclude that Turing actually wanted someone to build his P-Types, test them, and expand them if it were possible so to do. Yet, there remained this apparent contradiction of publishing the essay in a "never peer-reviewed" forum through a seemingly nondescript government printing office. Regardless, for over fifty years the algorithm went largely unexploited (Copeland & Proudfoot 1996/2004; Eberbach et al. 2004). Moreover, whether it was because of its HMSO pedigree or its lack of peer review polish, it was more than half a century after the algorithm was first printed (and it became available for study) that anyone publicly reported the results of efforts to instantiate the P-Type algorithm in electronic computing hardware. Here, the effort will be to try and change all that. Here the effort will be to show that, although the P-Type algorithm was forgotten for decades, it is actually an exquisitely simple engine and can be implemented quite effectively. This article will show that the P-Type algorithm can be instantiated. That it is accessible, is extensible, and is available for use.

Method

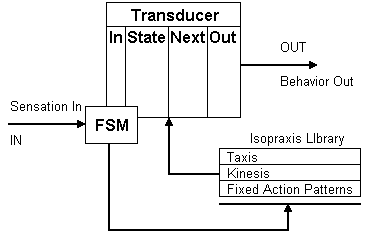

In his 1948 essay, Alan Turing described a simple computing machine (that he intended to be a machine intelligence) and a few results that came from his manual exercise of the device. In contrast to that description, this article reports three replication experiments intended to better understand the limits of the Turing algorithm and its instantiable potential. That is, the intent here is not to call upon the reader to recreate a particular methodology used or to validate a unique finding demonstrated. Rather, in the course of this article, an attempt will be made to describe actual instantiated, physical examples of the Turing machine and its embedded applications. The goal will be to examine the question of if there might actual be utility in the P-Type algorithm; to see if it can actually be built, so to speak.Thus, the methodology of replication begins with the Turing essay. But, it will be important to understand two things about the Turing algorithm as it was presented in 1948. First, Turing himself played an active but unique role in the process of describing, building, and operating his P-Type machine. Turing appears to have provided the functionality of a Finite State Machine (FSM) to the overall process, i.e., the algorithm, by repeatedly executing a finite set of rules essential to the construction and operation of the targeted engine. Second, the computing machine Turing (as an FSM) was creating in the narrative would today be called a Transducer. That is a, "device for which the output of the circuit [the machine] is not only a function of the specific instantaneous inputs, but also a function of the previous state of the system" (Cohen 1997: 161). Moreover, and perhaps the most significant feature of such a device is that, they may not necessarily halt upon the acceptance of an input word. Rather, such machines are routinely designed to "cycle-back" and wait at a convenient "starting point" while new input signals are accumulated. It seems this was true of the Turing invention. This functionality will show up abundantly later.

Another feature of the Turing Transducer was that the description or transition table within the Transducer was unorganised or (effectively) empty at the start. More too about this machine property will be discussed in the following sections. In his 1948 essay, Turing himself provided the functionality of a Finite State Machine (FSM) that directed the work of dynamically constructing a paper and pencil version of a P-Type machine. Subsequently, the machine (including Turing) showed continuous cybernetic (steered-feedback), self-organizing operation. Thus, by Turing example, each P-Type machine described here has two independent but "tightly coupled" parts: a FSM and a Transducer.

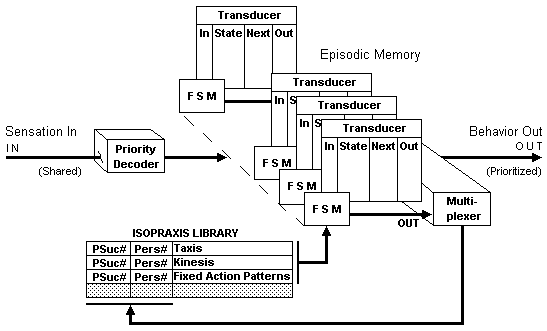

Figure 1 is a diagrammatic view of the operational kernel(s) of each P-Type instance used in the experiments described here. For the moment, one can ignore the block labeled Isopraxis Library since it is not from Turing. However, it is an application specific convenience added to the machine instances tested here and will figure prominently in all machine descriptions. The Library is a catalog of species-specific ethology. Using less anthropomorphic language: The Library is a source of potential behavioral outputs deemed relevant to the host and that can be used by the FSM to create Transducer transition table entries.

Per choices enumerated in the Turing essay, certain machine setup constraints were made and they remained constant across all of the experiments described here. First, there are no hidden memory units used. Second, the "next situation" (next state) is always chosen by pleasure-pain arrangement and not by sense stimulus or a memory unit. Pain affect is explicitly defined for each host physiology; pleasure affect is defined as the reduction of host-specific drives (Hull 1943), and all other internal processes related to non-pain and non-pleasure affect are treated as "tentative" indicators until either a pleasurable or painful affect are encountered.

As it is used here, an FSM-Transducer pair is a P-Type machine. If previously learned response behaviors exist in the Transducer, then those are always executed first. If there are no behaviors in the Transducer, then the process of self-organization begins. The process of self-organization begins with FSM writes. In all P-Type instances described here, an FSM can write only adaptive transition table entries, or behaviors, into their own respective "tightly coupled" FSM-Transducer pair.

Non-adaptive behaviors are defined as those behaviors tested in the current environment and found to produce painful affect or host-destructive results. Host-specific drives associated with avoiding painful affect or host-destructive situations do exist. Thus, adaptive behaviors are defined as those effects that ultimately (and possibly in concatenated sequence) produce pleasure by means of drive reduction. An adaptive behavior can be a lengthy sequence of behaviors producing several neutral and or pleasurable affect episodes but must ultimately terminate in the reduction of a host need or drive. Note: this makes a P-Type a sequence learner. Moreover, the reduction of a drive produces pleasure and pleasure, of course, becomes a teacher to the P-Type.

All rules for writing the transition table entries reside in the FSM. In operation, the FSM directs the writing of behavioral strategies into the Transducer in sequence order starting with the first attempted effect behavior. In this way, the P-Type becomes a penultimate transaction learner in that it is always ready to record the last effect transaction if the current affect transaction produces either neutral affect or an adaptive result. A simple queue mechanism allows for long sequences of queued behaviors to be tested in the environment while awaiting confirmation of their affective status or drive reduction success.

Behaviors known to produce painful affect may be associated with P-Type Transducers devoted to avoiding painful or host-destructive consequences. Behaviors that would typically produce neutral or pleasurable affect may be associated with Transducers associated with more common host ethology. In all cases, non-adaptive "tentative" behaviors are purged from their respective queues and are not recorded in the Transducer(s) if painful affect is encountered. Those behaviors that have produced neutral affect will remain in their respective queues until machine adaptive focus changes and or affect quality is decided by the host physiology. This method of mapping painful versus pleasurable affect onto specific P-Type machines permits an extension of the dialer mechanisms described in the Turing essay. More importantly, this mechanism provides a way to tailor heuristic drives to fit specific host/species characteristics.

The shortest write is just one behavior, or policy, long. During the research, it was found to be convenient to select behaviors from a prepared library of host-appropriate behavioral outputs. (See the Isopraxis Library mentioned above.) The longest write is exactly equal to the number of behaviors (or concatenated behaviors) left in the animate reserves of the host before the host expires by whatever cause. Transducer entries are (in their most crude form) just next state and output behavior tuples; the current state is assumed. Anticipated affect can be recorded in the Transducer for recognition purposes but will incur concomitant specificity and dimensionality problems. In principle, each FSM-Transducer pair is a computational engine whose purpose is to reduce one host need or drive. Overall, this makes the Turing P-Type is a gradient follower: a gradient follower that self-organizes.

Results

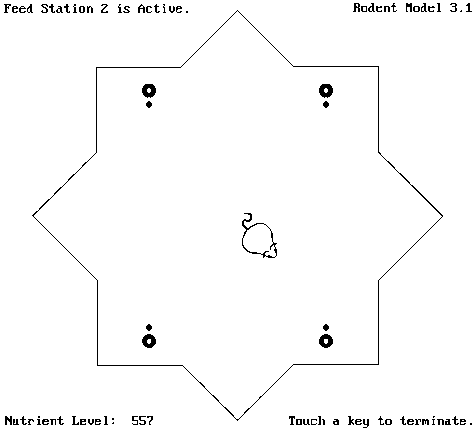

Rouly (2000) reported a method for instantiating the Turing P-Type machine. The most important parts of that method have just described. That article also reported achieving good results using the machines as controllers. Indeed, in separate experiments involving a situated and embodied P-Type, two adaptive controllers were initially created. The results of those two experiments supported their respective construction-oriented hypotheses.The first experiment involved a simple icon-mouse embodied as a mobile virtual agent situated in a simulated, 8-corner Hull enclosure. This experiment took place on a 2-D graphical surface that seems quite primitive by the "virtual world" standards. Fig 2 shows the first experiment as it appeared on a computer screen while in operation.

Figure 2 from Rouly (ibid.) the software version of the icon-mouse was in a simulated Hull enclosure and is viewed from overhead. Only one of the four feed stations (circles in four corners) was active at a time. Using a haptic sense and simulated olfaction the icon-mouse had to follow an olfactory gradient to the active feed station and then center the tip of its nose over the feed station in order to be fed. Between feedings, the icon-mouse was free to roam (at random) about the enclosure. The "mouse" did not have simulated vision. Moreover, it was also not a wall follower.

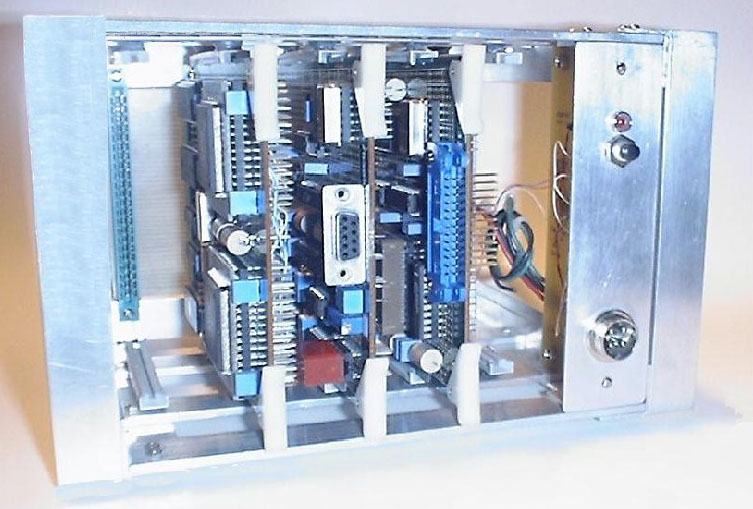

The second experiment reported by Rouly (ibid.) involved an embodied robotic rodent with its P-Type controller set in Field Programmable Gate Array (FPGA) technology. Like the virtual agent experiment before it, this was a situated experiment. But, in this case, this involved an artificial rodent robot in a large Hull enclosure, or training cage. The enclosure was almost a meter across and the rodent several centimeters long. In both of the former and the current experiments, the P-Types were not used as part of any artificial neural network as some have speculated was the intended purpose of the P-Type algorithm. Rather, each machine was a single-track, adaptive, serial bit-stream controller extended with tailored adjustments and taken strictly from the qualitative written descriptions Turing provided in his essay.

The goal of these first two experiments was to see if their artificial rodents could adapt to their surroundings using behaviors from a species-specific ethology, locate a food source using simulated olfaction, and to survive by eating a bit of simulated food. (Translated into less anthropomorphic language: the goal was for the embodied controllers to direct the joining of sensor affect with motor effect such that enduring control policies might emerge in the context of their respective situated environments.) Fig 3 shows the embodied robotic rodent whose P-Type controller was set in FPGA technology. The controller card cage was located remotely and connected to the rodent host by RF/IR telemetry. The P-Type controller and various support components are shown in Fig 4. The P-Type machine was mounted on the circuit card to the left in the card cage. Like the earlier experiments (ibid.), the "rodent" had to find its own food, i.e., it had to find the battery charging station in the enclosure and keep its own battery charged.

The first two examples were complimentary instantiations of the algorithm: one a purely software result and the other a hybrid result constructed in vehicular robotics and reconfigurable hardware, i.e., FPGA technology. In those experiments, the P-Type served as an adaptive controller (an agent) embodied by a mouse (an icon-mouse and a robotic rodent) situated in a virtual mouse enclosure based loosely on rodent training cages made famous by behavioral psychologists during the preceding century (Hull 1943).

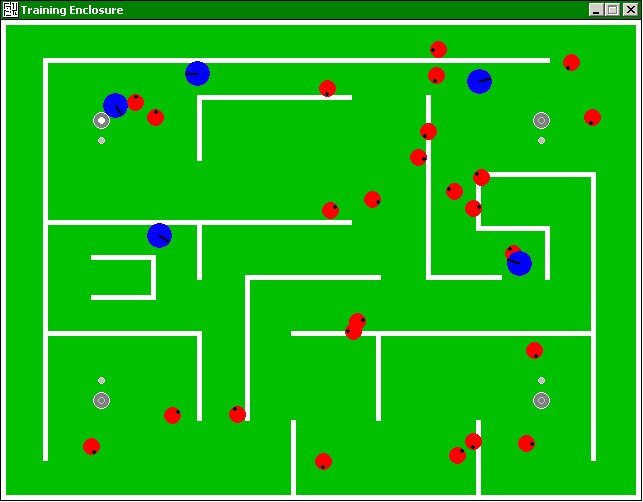

In Rouly (2007), the work moved directly into the domain of Multi-agent Systems (MAS). Moreover, this new experiment explored the extensibility of the Turing algorithm by parallelizing four independent P-Type automata as "agents" and packaging them as a single control "agency." In the Analysis section that follows, a diagram of how the four independent and parallelized P-Types were harnessed is presented. In fact, in this experiment, the P-Type controllers were explicitly constructed so as to cooperate and provide a single "agency" capable of reducing four primitive physiological and social drives derived from the Maslow Hierarchy of Needs (Maslow 1943). Each "agency" controlled one respective host icon-bug. Figure 5 shows the icon hosts in their maze at start-up as large predatory blue "bugs" and a food species of smaller red "bugs".

This experiment demonstrated how parallel copies of the P-Type algorithm might cooperate (be harnessed and multiplexed together) to control a single autonomous host in an explicitly social situation. At program start, the user selected how many predator and or prey icon hosts would inhabit the enclosed maze. In operation, the icon hosts were free to move about the maze until they were eaten, their energy levels were depleted, or they adapted, found available food, avoided their predators, and survived (Rouly 2007: 1). True to the spirit of the Turing P-Type algorithm, the behaviors exhibited by the icon hosts were emergent sequences. The icon hosts had differences in color, size, and markings, predators were distinguishable from prey, etc.

Analysis

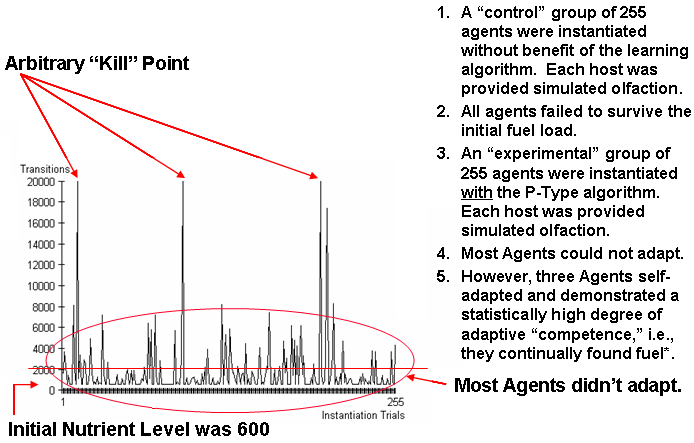

In "Intelligent Machinery", Turing described an instance of a theoretic computational machine that was capable of cybernetic self-organization. The replication experiments described here extended the P-Type Unorganised Machine and used it as part of larger machine intelligence research projects. Each machine demonstrated learning associated with teacher "interference" (Turing 1948: 19). In this case, Turing referred to "interference" learning (or behavior modification) as where a teacher perhaps human or otherwise, would deliver stimulus reinforcement to the machine intelligence as it responded to antecedent stimuli. Experience working with these machines also suggests that, perhaps, Turing intended for these machines to adapt whether their "teacher" was human or an arbitrary environmental stimulus provided as a feedback cue and interpreted by the machine as pleasure or pain affect. Next, the performance characteristics of two P-Type instantiations are reviewed. The first instantiation is simple, the second is more complex. Taken from Rouly (2000), the icon-mouse experiment, Figure 6 shows the results of the second of two, 255 trial runs of the "mouse" in its "enclosure." The figure depicts the results of the experimental trial as a continuous signal of repeated runs. The first set of trials, the control set, is not shown. This part of the Analysis section reviews the performance characteristics of the simplest P-Type instantiation.The first set of trials involved a control set of 255 "mice". These icon-mice had simulated olfaction but no P-Type learning engines. Thus, they could "sense" the odor of the food in the simulated enclosure; steering and moving towards it along a simulated olfactory gradient. However, because they had no P-Type learning engines, they could not learn from their experiences. The P-Type agents in the control group failed to survive the initial conditions and hysteretic hunger threshold. This result seems to be consistent with results reported in (Cioffi-Revilla et al. 2004) where the effect of memory on behavior was considered.

The second set of trials also involved 255 "mice". The result of this set of trials is the continuous signal shown in the Figure 6. These icon-mice were in the experimental configuration and enjoyed both olfaction and the benefit of P-Type adaptive learning. Most P-Type agents in the experimental group failed to learn how to survive yet several were marginally successful. Figure 6 depicts this "marginality" as the "noise" feature of the signal shown in the circled area. However, in particular, three "mice" did adapt to the enclosure during their existence. These are the three high "spikes" in the signal shown. These three P-Type machine controllers ultimately had to be extinguished due to time constraints. They had learned too well how to survive. For reference, post-mortem analysis of their Transducer contents revealed these "mice" had learned it was best to stay near the food and not to explore their enclosure.

Among the experimental group, an arithmetic mean of 2,870 and a non-biased standard deviation of 1,819 was calculated. It is clear that the performance of the three icon mice at the 20,000 life-time motor transitions level was well beyond the 6th deviation of all members of the population comprising their cohort. Simply put, the apparent success of their performance was significantly beyond any random sequence of events (Rouly 2000: 51).

The next implementation to be described is the icon-bugs experiment (Rouly 2007). This involves a MAS. Figure 5 is a screenshot of the simulation starting out with 30 predator and prey hosts in simultaneous operation. Each host was under the individual control of a separate, parallelized P-Type device. Figure 7 shows one of the parallelized P-Type machine intelligences used in the MAS experiment internally and diagrammatically.

In the 2007 experiment, where control authority over 30 individual hosts was required, each host had its own controller, or control agency. Additionally, it was determined that each agency would be given a set of four independent P-Type unorganised machines (acting as discrete drive reducing agents over the Maslow Hierarchy of Needs) and that these lower-level P-Type agents were expected to produce an aggregate control product at the higher-level agency. Figure 7 shows a block diagram of one such control agency. Each P-Type agent machine corresponds to one FSM-Transducer pair (block) in this diagram. A set of four P-Types made up one agency (ibid.: 2).

Discussion

Perhaps it is because of the confusion surrounding the first printing of the essay, "Intelligent Machinery", or perhaps it is because the P-Type description in the essay uses archaic technical language, but this particular Turing machine has been misunderstood, overrated, and underdeveloped at different times and for different reasons for over half a century. Turing created the little engine in 1948 to be a simple device whose purpose was to mimic a few of the observable artifacts of natural learning. Yet, the P-Type machine seems to be little more than a gradient follower or a hill-climber that can be operated in a noisy environment. However, what is interesting about a P-Type is that it will continually self-organize and attempt to follow a gradient, even one that is changing, for as long a time as it is allowed to operate. Furthermore, it can be constructed to fit into exquisitely small bits of computing hardware or it can be assembled into an expandable architecture of parallel-connected machines.The P-Type algorithm appears to share some of its attributes with the family of Reinforcement Learning algorithms. However, conservatively, the P-Type may precede (by decades) even the earliest prototype coming out of this latter body of computational research. Even then, the similarities are only qualitative. For example, in contrast to many Reinforcement Learning machines, a P-Type does not map its states onto the world (Kaelbling et al. 1996; Buconiu et al. 2008). Yet, like a Reinforcement Learner, the P-Type does respond to machine-exogenous cues that help it measure the effectiveness of potential behavior policies as they are tested and learned.

As a final point, when a host "expires" (or really at any time) the accumulated Transducer-stored "knowledge" (episodic memory) in the P-Type can be saved. Thus, although never intended to be a Lamarckian experiment, this memory transfer capability does produce interesting results and sets up future experiments. For example, since each Transducer can produce a tangible (disk/written) record of all acquired host-specific knowledge, those structures can be analyzed and processed off-line. In the very first icon-mouse experiment, sequential generations of "mice" were often given benefit of their predecessor’s "learned memories" as nothing more than a curiosity; just to see what value the transfer might have. Not surprisingly, "mice" that were more adaptive transferred what appeared to be improved survival skills on to subsequent generations. This might make for an interesting future experiment. Also, the "bugs" experiment (with the maze) stressed the spatial learning capacities of the icon-hosts. Although the "bug" code did not allow for easy trans-generational migration of memories, it was possible to do. And, like in the "mice" experiments before them, those bug-icons that inherited superior episodic (survival skill) memory traces did seem to be able to cope better with maze events when informally assessed by how long they survived before their starvation or capture by predators.

Summary

"Intelligent Machinery" revealed a computational machine whose purpose was to record adaptive behavior sequences and to be steered towards adaptive success. In particular, the essay described how to construct such a machine, detailing its attributes and functionality by example and quality. This article has reviewed three machine intelligence (replication) experiments in which P-Type Unorganised Machines were physically instantiated (based on the description in "Intelligent Machinery"). These experiments demonstrate the extensible nature of the P-Type by having it make decisions about individual and social behavioral choice when operating in the presence of dozens of other similar machine intelligences. These experiments show that parallel P-Type Unorganised Machines can steer an embodied host in a small-group social setting. They also show that the P-Type algorithm — forgotten for decades — can be implemented very effectively. Based on all of the foregoing, it can be conclused that the P-Type — an exquisitely simple engine — is accessible, extensible, and available for use.References

Buconiu, L., Babuska, R., de Schutter, B. (2008). A Comprehensive Survey of Multiagent Reinforcement Learning. IEEE Transactions on Systems, Man, and Cybernetics – Part C: Applications and Reviews. Vol. 38(2).Cioffi-Revilla, C., Paus, S. M., Luke, S., Olds, J. L., Thomas, J. 2004. "Mnemonic Structure and Sociality: A Computational Agent-Based Simulation Model." Proceedings of the Conference on Collective Intentionality IV, Certosa di Pontignano, Siena, Italy, 13-15 October 2004. Available from complex@gmu.edu.

Cohen, D. (1997). Introduction to Computer Theory. New York: John Wiley & Sons, Inc.

Copeland, B. J., Proudfoot, D. (1996). "On Alan Turing’s Anticipation of Connectionism". Synthese. Vol. 108, No. 3 (Sep. 1996), pp. 361–377.

Copeland, B. J., Proudfoot, D. (2004). "The Computer, Artificial Intelligence, and

the Turing Test". In Alan Turing: Life and Legacy of a Great Thinker, Teuscher,

C. ed, Heidelberg: Springer Verlag, pp. 317-351.

Eberbach, E., Goldin, D., Wegner, P. (2004). "Turing’s Ideas and Models". In

Alan Turing: Life and Legacy of a Great Thinker, Teuscher, C. ed, Heidelberg: Springer Verlag, pp. 159-196.

Hull, C. L. (1943). Principles of Behavior. New York: Appleton-Century-Crofts.

Kaelbling, L., Littman, M., Moore, A. (1996). "Reinforcement Learning: A Survey". Journal of Artificial Intelligence Research, Vol. 4, pp. 237-285.

Maslow, A. (1943). "A Theory of Human Motivation". Psychological Review, Vol. 50, pp. 370-396.

Rouly, O. C. (2000). Cybernetic Intelligence: A return to complex qualitative feedback theory. Las Cruces: New Mexico State University. Unpublished thesis.

Rouly, O. C. (2007). "Learning Automata and Need-based Drive Reduction". In Ha, Q. P., Kwok, N. M. (eds), Proceedings of the 8th International Conference on Intelligent Technologies, Sydney, Australia.

Turing, A. M. (1948). "Intelligent Machinery". N. P. L. Report.